Check my scholar profile at

「Featured Publications」

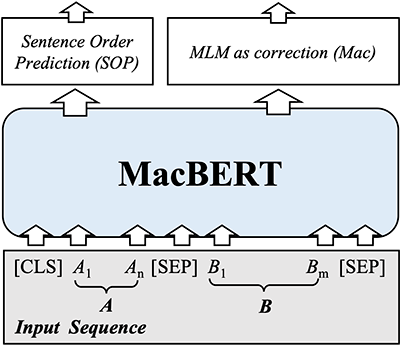

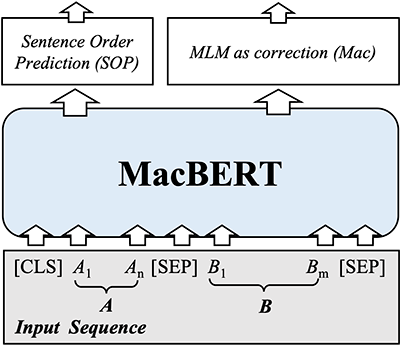

Pre-Training with Whole Word Masking for Chinese BERT

Yiming Cui, Wanxiang Che, Ting Liu, Bing Qin, Ziqing Yang.

IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), Vol.29. 2021. [J]

TLDR: This paper proposes a series of Chinese pre-trained language models with thorough evaluations.

🎉 Selected as one of the ESI Highly Cited Papers in Engineering by Clarivate™.

🎉 Selected as one of the Top-25 Downloaded Papers in the IEEE Signal Processing Society (2021-2023).

📄 PDF

🔎 Bib

IEEE Xplore

Chinese-BERT-wwm

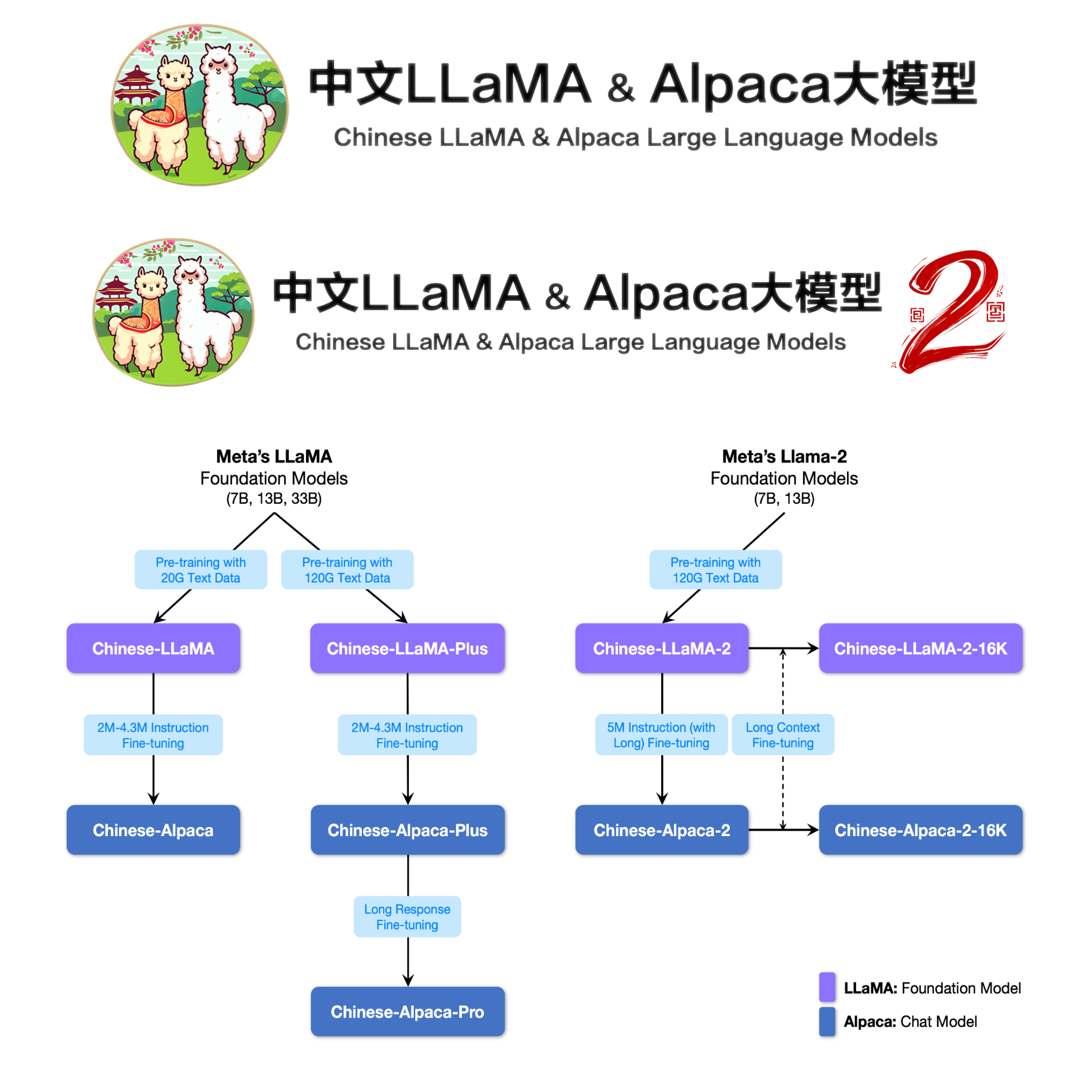

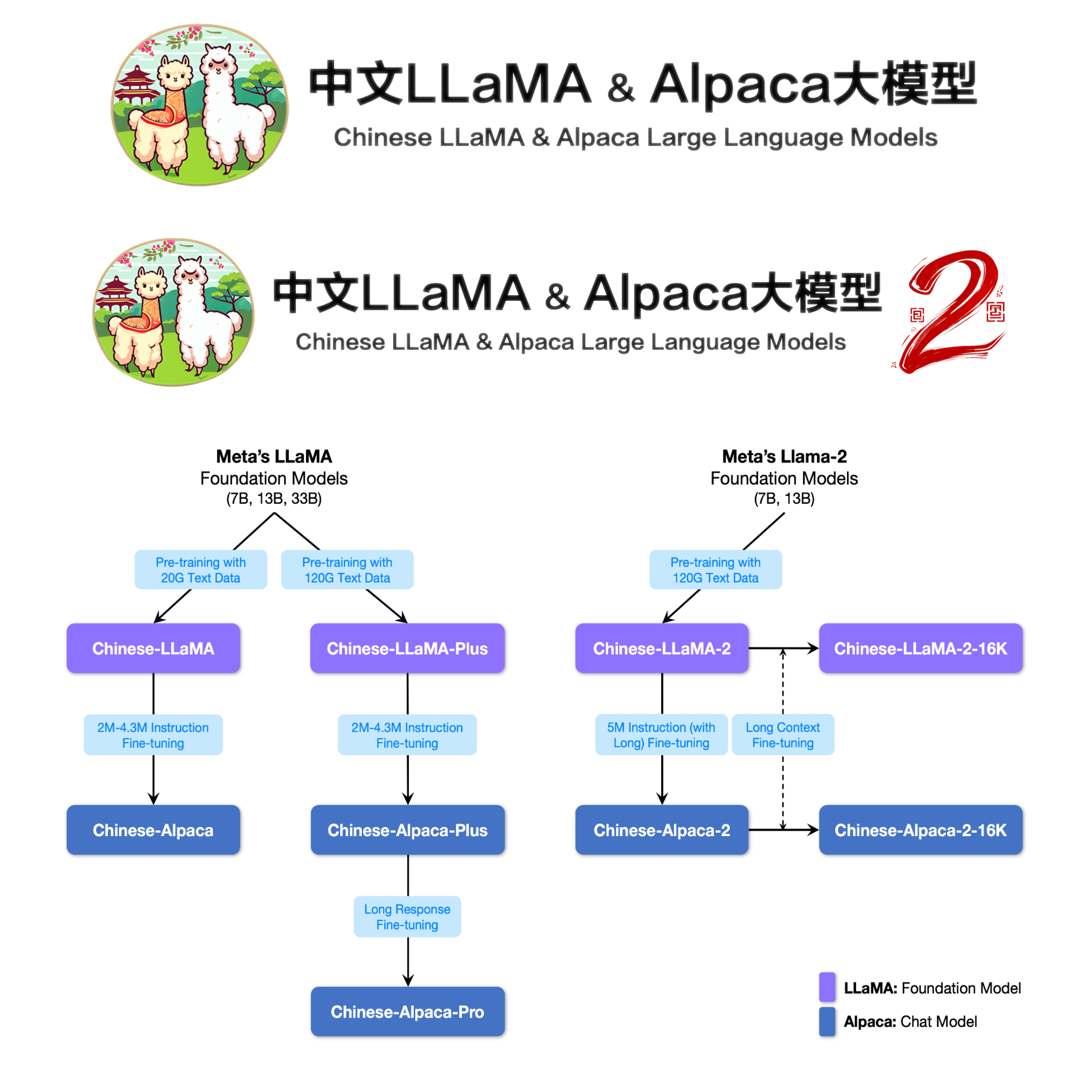

Efficient and Effective Text Encoding for Chinese LLaMA and Alpaca

Yiming Cui*, Ziqing Yang*, Xin Yao.

arXiv pre-print: 2304.08177

TLDR: This paper proposes Chinese LLaMA and Alpaca models.

🎉 The open-source projects reached 1st place on GitHub Trending.

📄 PDF

🔎 Bib

arXiv

Chinese-LLaMA-Alpaca

Chinese-LLaMA-Alpaca-2

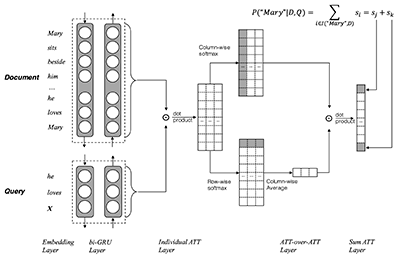

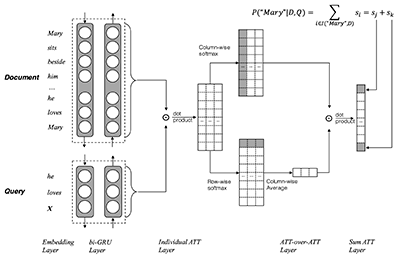

Attention-over-Attention Neural Networks for Reading Comprehension

Yiming Cui, Zhipeng Chen, Si Wei, Shijin Wang, Ting Liu, Guoping Hu.

In Proceedings of ACL 2017 [C]

TLDR: This paper proposes a two-stream attention network (AoA) for machine reading comprehension.

🎉 This paper has been selected as one of the Most Influential ACL 2017 Papers (Top 11) by Paper Digest.

📄 PDF

🔎 Bib

🪧 Slides

ACL Anthology

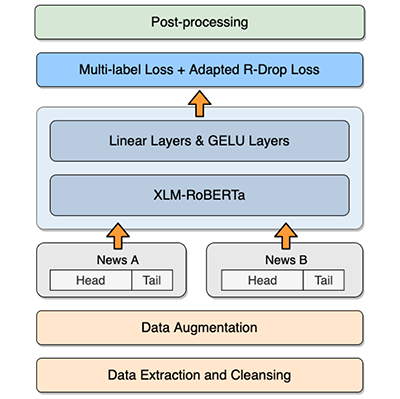

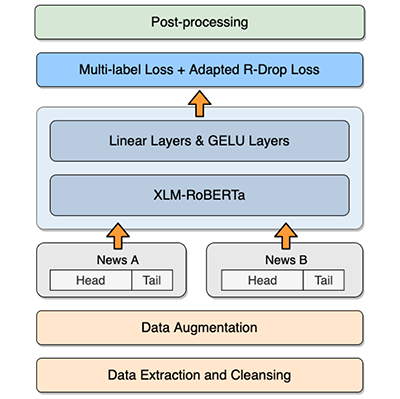

HFL at SemEval-2022 Task 8: A Linguistics-inspired Regression Model with Data Augmentation for Multilingual News Similarity

Zihang Xu, Ziqing Yang, Yiming Cui, Zhigang Chen.

The 16th International Workshop on Semantic Evaluation (SemEval 2022) [W]

TLDR: This paper describes our winning system for SemEval-2022 Task 8.

🎉 This paper received a Best Paper Honorable Mention Award at SemEval-2022.

📄 PDF

🔎 Bib

ACL Anthology

SemEval2022-Task8-TonyX

「2024」

Self-Evolving GPT: A Lifelong Autonomous Experiential Learner

-

Jinglong Gao, Xiao Ding, Yiming Cui, Jianbai Zhao, Hepeng Wang, Ting Liu, Bing Qin.

ACL 2024 [C]

🔎 Bib

arXiv

GitHub

Rethinking LLM Language Adaptation: A Case Study on Chinese Mixtral

「2023」

Efficient and Effective Text Encoding for Chinese LLaMA and Alpaca

Gradient-based Intra-attention Pruning on Pre-trained Language Models

IDOL: Indicator-oriented Logic Pre-training for Logical Reasoning

MiniRBT: A Two-stage Distilled Small Chinese Pre-trained Model

-

Xin Yao, Ziqing Yang, Yiming Cui, Shijin Wang.

arXiv pre-print: 2304.00717

🔎 Bib

arXiv

GitHub

「2022」

LERT: A Linguistically-motivated Pre-trained Language Model

-

Yiming Cui, Wanxiang Che, Shijin Wang, Ting Liu.

arXiv pre-print: 2211.05344

🔎 Bib

arXiv

GitHub

Visualizing Attention Zones in Machine Reading Comprehension Models

-

Yiming Cui, Wei-Nan Zhang, Ting Liu.

STAR Protocols, Vol.3 [J]

🔎 Bib

Multilingual Multi-Aspect Explainability Analyses on Machine Reading Comprehension Models

-

Yiming Cui, Wei-Nan Zhang, Wanxiang Che, Ting Liu, Zhigang Chen, Shijin Wang.

iScience, Vol.25 [J]

🔎 Bib

GitHub

GitHub

ExpMRC: Explainability Evaluation for Machine Reading Comprehension

Teaching Machines to Read, Answer and Explain

-

Yiming Cui, Ting Liu, Wanxiang Che, Zhigang Chen, Shijin Wang.

IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), Vol.30 [J]

🔎 Bib

arXiv

IEEE Xplore

PERT: Pre-training BERT with Permuted Language Model

-

Yiming Cui, Ziqing Yang, Ting Liu.

arXiv pre-print: 2203.06906

🔎 Bib

arXiv

GitHub

Interactive Gated Decoder for Machine Reading Comprehension

-

Yiming Cui, Wanxiang Che, Ziqing Yang, Ting Liu, et al.

ACM Transactions on Asian and Low-Resource Language Information Processing (TALLIP), Vol.21 [J]

🔎 Bib

ACM DL

A Static and Dynamic Attention Framework for Multi Turn Dialogue Generation

-

Wei-Nan Zhang, Yiming Cui, Kaiyan Zhang, Yifa Wang, Qingfu Zhu, Lingzhi Li, Ting Liu.

ACM Transactions on Information Systems (TOIS) [J]

🔎 Bib

ACM DL

TextPruner: A Model Pruning Toolkit for Pre-Trained Language Models

Cross-Lingual Text Classification with Multilingual Distillation and Zero-Shot-Aware Training

-

Ziqing Yang, Yiming Cui, Zhigang Chen, Shijin Wang.

arXiv pre-print: 2202.13654

🔎 Bib

arXiv

CINO: A Chinese Minority Pre-trained Language Model

-

Ziqing Yang, Zihang Xu, Yiming Cui, Baoxin Wang, Min Lin, Dayong Wu, Zhigang Chen.

COLING 2022 [C]

🔎 Bib

ACL Anthology

GitHub

HFL at SemEval-2022 Task 8: A Linguistics-inspired Regression Model with Data Augmentation for Multilingual News Similarity

-

Zihang Xu, Ziqing Yang, Yiming Cui, Zhigang Chen.

SemEval 2022 [W]

🔎 Bib

ACL Anthology

GitHub

-

🏆 This paper received a Best Paper Honorable Mention at SemEval-2022 and describes the winning system of SemEval-2022 Task 8.

HIT at SemEval-2022 Task 2: Pre-trained Language Model for Idioms Detection

-

Zheng Chu, Ziqing Yang, Yiming Cui, Zhigang Chen, Ming Liu.

The 16th International Workshop on Semantic Evaluation (SemEval 2022) [W]

🔎 Bib

ACL Anthology

-

🏆 This paper describes the winning system of SemEval 2022 Task 2 (Subtask A, one-shot).

Augmented and challenging datasets with multi-step reasoning and multi-span questions for Chinese judicial reading comprehension

-

Qingye Meng, Ziyue Wang, Hang Chen, Xianzhen Luo, Baoxin Wang, Zhipeng Chen, Yiming Cui, Dayong Wu, Zhigang Chen, Shijin Wang.

AI Open, Vol.3 [J]

🔎 Bib

ScienceDirect

「2021」

Pre-Training with Whole Word Masking for Chinese BERT

-

Yiming Cui, Wanxiang Che, Ting Liu, Bing Qin, Ziqing Yang.

IEEE/ACM Transactions on Audio, Speech, and Language Processing (TASLP), Vol.29 [J]

🔎 Bib

IEEE Xplore

GitHub

-

🏆 This paper has been selected as one of the ESI Highly Cited Papers in Engineering by Clarivate™.

Adversarial Training for Machine Reading Comprehension with Virtual Embeddings

-

Ziqing Yang, Yiming Cui, Chenglei Si, Wanxiang Che, Ting Liu, Shijin Wang, Guoping Hu.

*SEM 2021 [W]

🔎 Bib

ACL Anthology

Memory Augmented Sequential Paragraph Retrieval for Multi-hop Question Answering

-

Nan Shao, Yiming Cui, Ting Liu, Shijin Wang, Guoping Hu.

arXiv pre-print: 2102.03741

🔎 Bib

arXiv

📚 Natural Language Processing: A Pre-trained Model Approach (自然语言处理:基于预训练模型的方法)

Benchmarking Robustness of Machine Reading Comprehension Models

-

Chenglei Si, Ziqing Yang, Yiming Cui, Wentao Ma, Ting Liu, Shijin Wang.

ACL 2021 (Findings) [C]

🔎 Bib

ACL Anthology

GitHub

Bilingual Alignment Pre-training for Zero-shot Cross-lingual Transfer

-

Ziqing Yang, Wentao Ma, Yiming Cui, Jiani Ye, Wanxiang Che, Shijin Wang.

MRQA 2021 [W]

🔎 Bib

ACL Anthology

「2020」

Discriminative Sentence Modeling for Story Ending Prediction

-

Yiming Cui, Wanxiang Che, Wei-Nan Zhang, Ting Liu, Shijin Wang, Guoping Hu.

AAAI 2020 [C]

🔎 Bib

🏢 AAAI Publisher

A Sentence Cloze Dataset for Chinese Machine Reading Comprehension

Revisiting Pre-Trained Models for Chinese Natural Language Processing

-

Yiming Cui, Wanxiang Che, Ting Liu, Bing Qin, Shijin Wang, Guoping Hu.

EMNLP 2020 (Findings) [C]

🔎 Bib

ACL Anthology

GitHub

- 🎉 This paper has been selected as one of the Most Influential EMNLP 2020 Papers (Top 11) by Paper Digest.

CharBERT: Character-aware Pre-trained Language Model

Is Graph Structure Necessary for Multi-hop Question Answering?

-

Nan Shao, Yiming Cui, Ting Liu, Shijin Wang, Guoping Hu.

EMNLP 2020 [C]

🔎 Bib

ACL Anthology

Conversational Word Embedding for Retrieval-based Dialog System

TextBrewer: An Open-Source Knowledge Distillation Toolkit for Natural Language Processing

Recall and Learn: Fine-tuning Deep Pretrained Language Models with Less Forgetting

「2019」

Cross-Lingual Machine Reading Comprehension

A Span-Extraction Dataset for Chinese Machine Reading Comprehension

Contextual Recurrent Units for Cloze-style Reading Comprehension

-

Yiming Cui, Wei-Nan Zhang, Wanxiang Che, Ting Liu, Zhipeng Chen, Shijin Wang, Guoping Hu.

arXiv pre-print: 1911.05960

🔎 Bib

arXiv

Convolutional Spatial Attention Model for Reading Comprehension with Multiple-Choice Questions

TripleNet: Triple Attention Network for Multi-Turn Response Selection in Retrieval-based Chatbots

Improving Machine Reading Comprehension via Adversarial Training

-

Ziqing Yang, Yiming Cui, Wanxiang Che, Ting Liu, Shijin Wang, Guoping Hu.

arXiv pre-print: 1911.03614

🔎 Bib

arXiv

Exploiting Persona Information for Diverse Generation of Conversational Responses

CJRC: A Reliable Human-Annotated Benchmark DataSet for Chinese Judicial Reading Comprehension

-

Xingyi Duan, Baoxin Wang, Ziyue Wang, Wentao Ma, Yiming Cui, Dayong Wu, Shijin Wang, Ting Liu, Tianxiang Huo, Zhen Hu, Heng Wang, Zhiyuan Liu.

CCL 2019 [C]

🔎 Bib

arXiv

GitHub

「2018」

Dataset for the First Evaluation on Chinese Machine Reading Comprehension

Context-Sensitive Generation of Open-Domain Conversational Responses

-

Wei-Nan Zhang, Yiming Cui, Yifa Wang, Qingfu Zhu, Lingzhi Li, Lianqiang Zhou, Ting Liu.

COLING 2018 [C]

🔎 Bib

ACL Anthology

HFL-RC System at SemEval-2018 Task 11: Hybrid Multi-Aspects Model for Commonsense Reading Comprehension

-

Zhipeng Chen, Yiming Cui*, Wentao Ma, Shijin Wang, Ting Liu, Guoping Hu.

arXiv pre-print: 1803.05655

🔎 Bib

arXiv

A Car Manual Question Answering System based on Neural Network (基于神经网络的汽车说明书问答系统)

-

Le Qi, Yu Zhang, Wentao Ma, Yiming Cui, Shijin Wang, Ting Liu.

Journal of Shanxi University (Natural Science Edition) [J]

🔎 Bib

「2017」

Attention-over-Attention Neural Networks for Reading Comprehension

-

Yiming Cui, Zhipeng Chen, Si Wei, Shijin Wang, Ting Liu, Guoping Hu.

ACL 2017 [C]

🔎 Bib

🪧 Slides

ACL Anthology

- 🎉 This paper has been selected as one of the Most Influential ACL 2017 Papers (Top 11) by Paper Digest.

The Brilliant Chinese Achievements in SQuAD Challenge (斯坦福SQuAD挑战赛的中国亮丽榜单)

-

Yiming Cui, Ting Liu, Shijin Wang, Zhipeng Chen, Wentao Ma, Guoping Hu.

Communication of CCF [M]

🔎 Bib

Generating and Exploiting Large-scale Pseudo Training Data for Zero Pronoun Resolution

「2016」

Consensus Attention-based Neural Networks for Chinese Reading Comprehension

LSTM Neural Reordering Feature for Statistical Machine Translation

「Before 2016」

Augmenting Phrase Table by Employing Lexicons for Pivot-based SMT

-

Yiming Cui, Conghui Zhu, Xiaoning Zhu, Tiejun Zhao.

arXiv pre-print: 1512.00170

🔎 Bib

arXiv

Context-extended Phrase Reordering Model for Pivot-based Statistical Machine Translation

-

Xiaoning Zhu, Tiejun Zhao, Yiming Cui, Conghui Zhu.

IALP 2015 [C]

🔎 Bib

IEEE Xplore

The USTC Machine Translation System for IWSLT2014

-

Shijin Wang, Yuguang Wang, Jianfeng Li, Yiming Cui, Lirong Dai.

IWSLT 2014 [W]

🔎 Bib

ACL Anthology

Phrase Table Combination Deficiency Analyses in Pivot-based SMT

-

Yiming Cui, Conghui Zhu, Xiaoning Zhu, Tiejun Zhao, Dequan Zheng.

NLDB 2013 [C]

🔎 Bib

Springer

The HIT-LTRC Machine Translation System for IWSLT 2012

Notations: [J]ournal, [C]onference, [W]orkshop, [B]ook, [M]agazine