Attention-over-Attention Neural Networks for Reading Comprehension

Published in ACL 2017

Authors

Yiming Cui, Zhipeng Chen, Si Wei, Shijin Wang, Ting Liu, Guoping Hu

Highlights

- 🎉 This paper has been selected by Paper Digest as one of the Most Influential ACL 2017 Papers (Top 11).


Abstract

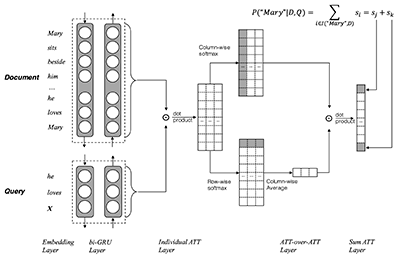

Cloze-style reading comprehension is a representative problem in mining the relationship between a document and a query. In this paper, we present a simple but novel model called the attention-over-attention reader to better solve the cloze-style reading comprehension task. The proposed model places another attention mechanism over the document-level attention and induces “attended attention” for final answer predictions. One advantage of our model is that it is simpler than related works while giving excellent performance. In addition to the primary model, we also propose an N-best re-ranking strategy to double-check the validity of the candidates and further improve the performance. Experimental results show that the proposed methods outperform various state-of-the-art systems by a large margin on public datasets, such as CNN and the Children’s Book Test.
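To make the idea of “attended attention” more concrete, here is a minimal, non-authoritative sketch in plain Python, based on our reading of the full paper's formulation. A pair-wise matching matrix between document and query words is built. A column-wise softmax gives one document-level attention per query word. A row-wise softmax, averaged over document positions, gives a query-level attention. The attended attention is the query-weighted sum of the document-level attentions, and a word's score is the sum of attention over every position where it appears. Assumptions: the contextual embeddings (bi-directional GRU outputs in the paper) are already computed, and here they are replaced by small hand-written vectors. The function names `attention_over_attention` and `answer_scores` are hypothetical. The sketch also scores every document word rather than only the candidate answers.

```python
import math
from collections import defaultdict


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_over_attention(h_doc, h_query):
    """Hypothetical helper: return the attended attention s, one weight per document token.

    h_doc: |D| vectors, h_query: |Q| vectors, all of the same dimension (assumed precomputed).
    """
    n_doc, n_query = len(h_doc), len(h_query)
    # Pair-wise matching matrix: M[i][j] = dot(h_doc[i], h_query[j]).
    M = [[sum(a * b for a, b in zip(d, q)) for q in h_query] for d in h_doc]
    # Column-wise softmax: alpha[t] is a distribution over document positions for query word t.
    alpha = [softmax([M[i][t] for i in range(n_doc)]) for t in range(n_query)]
    # Row-wise softmax: beta_rows[i] is a distribution over query words for document word i.
    beta_rows = [softmax(M[i]) for i in range(n_doc)]
    # Average the query-level attentions over all document positions.
    beta = [sum(row[t] for row in beta_rows) / n_doc for t in range(n_query)]
    # Attended attention: weight each document-level attention by beta and sum over query words.
    return [sum(alpha[t][i] * beta[t] for t in range(n_query)) for i in range(n_doc)]


def answer_scores(doc_tokens, s):
    # Hypothetical helper: sum attention over all positions where each word occurs.
    scores = defaultdict(float)
    for token, weight in zip(doc_tokens, s):
        scores[token] += weight
    return dict(scores)


# Toy usage with made-up 2-dimensional embeddings (illustrative only).
doc_tokens = ["mary", "went", "to", "paris", "mary", "smiled"]
h_doc = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.1], [0.9, 0.2], [1.0, 0.1], [0.0, 0.5]]
h_query = [[1.0, 0.0], [0.2, 0.8]]

s = attention_over_attention(h_doc, h_query)
scores = answer_scores(doc_tokens, s)
best = max(scores, key=scores.get)
print(best, round(sum(s), 6))  # prints: mary 1.0
```

Because each column-wise attention sums to one and the averaged query-level attention also sums to one, the attended attention `s` is itself a distribution over document positions. This is why the per-word scores can be read directly as answer probabilities.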